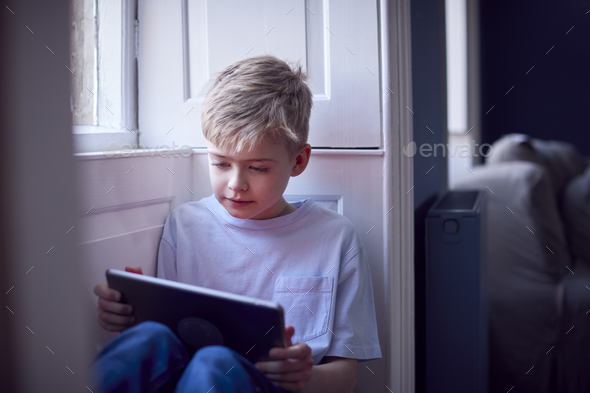

Meta and Snap Respond to EU Inquiry on Child Safety Measures

Meta and Snap are the latest tech companies to receive formal requests for information (RFIs) from the European Commission about the steps they are taking to safeguard minors on their platforms. The requests were sent under the bloc's Digital Services Act (DSA) and underline that child protection is a priority for the EU. They follow similar RFIs sent to TikTok and YouTube.

In April, the Commission designated 19 services as very large online platforms (VLOPs) or very large online search engines (VLOSEs). Meta's social networks Facebook and Instagram and Snap's messaging app Snapchat are among them. The full DSA regime won't apply until February next year, but these designated platforms must already comply.

The RFIs ask how Meta and Snap are meeting their DSA obligations on risk assessment and on mitigation measures to protect minors online. They pay particular attention to risks to children's mental and physical health. Both companies have until December 1 to respond.

A Snap spokesperson said the company shares the goals of the EU and the DSA to help ensure that digital platforms provide an age-appropriate, safe and positive experience. Meta emphasized its commitment to giving teens a safe online environment and pointed to the more than 30 tools it has introduced to support teens and their families.

This isn't the first RFI Meta has received under the DSA. Earlier requests asked about its handling of illegal content and of disinformation risks related to the Israel-Hamas war, as well as the steps it has taken to protect election security.

Child protection, the Israel-Hamas war and election security have emerged as priority areas in the Commission's recent RFIs. Its requests are not limited to those topics, however. A recent RFI to AliExpress, for example, asked about the marketplace's measures to comply with consumer protection obligations.